Sqoop Tools:

Sqoop is a collection of related tools. To use Sqoop, we specify the tool we want to use and the arguments that control that tool.

Syntax:

sqoop tool-name [tool-arguments]

To display a list of all available sqoop tools:

Command:

sqoop help

Note:

We can also display the help for a specific tool by entering:

sqoop help tool-name or sqoop tool-name --help

Example:

sqoop help import or sqoop import --help

To see how each Sqoop tool is invoked, check its usage line:

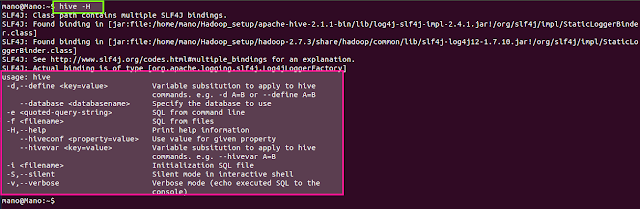

mano@Mano:~$ sqoop import --help

usage: sqoop import [GENERIC-ARGS] [TOOL-ARGS]

So, the tool arguments are divided into two categories:

1. [GENERIC-ARGS] ==> These control the configuration and Hadoop server settings.

Note:

Generic arguments come after the tool name but before any tool-specific arguments (such as --connect, --username, etc.).

Generic arguments are preceded by a single dash character (-).

2. [TOOL-ARGS] ==> These are specific to the Sqoop tool being used.

Note:

Tool-specific arguments start with two dashes (--), unless they are single-character arguments such as -P.
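
The ordering rule above can be sketched as a small shell script. It only assembles and prints an import command so the positions of the two argument groups are visible. The job name `sqoop_demo_import` and the table `employees` are placeholders chosen for illustration and are not from the original example; the JDBC URL is the one used later in this post.

```shell
#!/bin/sh
# Generic arguments: single dash, placed right after the tool name.
# -D sets a Hadoop property; the property value here is a placeholder.
generic_args="-D mapreduce.job.name=sqoop_demo_import"

# Tool-specific arguments: two dashes, except single-character ones such as -P
# (which prompts for the password on the console).
# The table name "employees" is hypothetical.
tool_args="--connect jdbc:mysql://localhost/sqoop_test --username root -P --table employees"

# Tool name first, then generic arguments, then tool-specific arguments.
cmd="sqoop import $generic_args $tool_args"

# Print the assembled command for review instead of running it.
echo "$cmd"
```

Swapping the two groups (placing -D after --connect) would break the ordering described in the note above, so keeping them in separate variables makes the intended order harder to get wrong.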

Arguments can also be passed through an options file:

Step 1: Create a file on local storage that lists the tool and the arguments to pass to Sqoop, one per line.

Step 2: Pass the options file using the --options-file argument.

Syntax:

sqoop --options-file file_path

Example:

Here I inspect the database using the eval tool, with the arguments supplied from an options file.

options_file.txt

#

# Options file for Sqoop eval

#

eval

--connect

jdbc:mysql://localhost/sqoop_test

--username

root

--password

root
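
A minimal sketch of how the two steps might look in practice, assuming the options file is written to the current directory. The query `SELECT 1` is a placeholder added for illustration (the eval tool needs a statement to run, and none is given in the file); it is not taken from the original run.

```shell
#!/bin/sh
# Step 1: write the options file, one option or value per line.
# Lines starting with # are comments.
options_file="options_file.txt"
cat > "$options_file" <<'EOF'
#
# Options file for Sqoop eval
#
eval
--connect
jdbc:mysql://localhost/sqoop_test
--username
root
--password
root
EOF

# Step 2: pass the file with --options-file; further arguments may follow it.
# The query below is a placeholder, not from the original post.
if command -v sqoop >/dev/null 2>&1; then
    sqoop --options-file "$options_file" --query "SELECT 1"
else
    echo "sqoop not found; would run: sqoop --options-file $options_file --query \"SELECT 1\""
fi
```

Keeping the connection details in the file and the query on the command line lets the same options file be reused for different statements. Storing a password in plain text is only reasonable for a local test setup like this one; the -P argument mentioned earlier prompts for it instead.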

Execution:

Output:

