Apache NiFi Installation on Ubuntu

Step 1: Download from Apache Nifi website and extract Nifi package in desired directory

Apache Nifi website link to download : https://nifi.apache.org/download.html

There could be two distributions:

Screenshot for reference:

Extract the distribution:

Command: tar -xvf /home/mano/Hadoop_setup/nifi-1.6.0-bin.tar.gz

Screenshot for reference:

Step 2: Configuration

NiFi provides several different configuration options which can be configured on nifi.properties file.

At present, i'm just making change to nifi.ui.banner.text property.

Step 3: Starting Apache Nifi:

On the terminal window,navigate to the Nifi directory and run the following below commands:

i)bin/nifi.sh run:

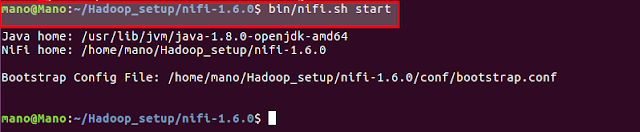

ii) bin/nifi.sh start:

iii) bin/nifi.sh status:

iv)bin/nifi.sh stop:

Step 4: Apache Nifi Web User Interface:

After Apache Nifi Started, Web User Interface (UI) to create and monitor our dataflow.

To use Apache Nifi, open a web browser and navigate to http://localhost:8080/nifi

Step 1: Download NiFi from the Apache NiFi website and extract the package into the desired directory

Download link: https://nifi.apache.org/download.html

Two binary distributions are offered:

- a file ending in tar.gz, for Linux

- a file ending in zip, for Windows

Extract the distribution:

Command: tar -xvf /home/mano/Hadoop_setup/nifi-1.6.0-bin.tar.gz
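If you would rather unpack NiFi somewhere other than the current directory, tar's -C option can help. The following is only a sketch: extract_nifi is a hypothetical helper name, and the target directory in the example call is an assumption, so adjust both to your setup.

```shell
#!/usr/bin/env bash
# Sketch: extract a NiFi tarball into a chosen directory.
# extract_nifi is a hypothetical helper, not something NiFi ships.
extract_nifi() {
  local archive="$1" target="$2"
  # Create the target directory if it does not exist yet.
  mkdir -p "$target" || return 1
  # -x extract, -z gunzip, -f archive file, -C switch to the target first.
  tar -xzf "$archive" -C "$target"
}

# Example call (the target path is an assumption):
# extract_nifi /home/mano/Hadoop_setup/nifi-1.6.0-bin.tar.gz "$HOME/nifi"
```

The archive is unpacked below the target directory, and that extracted folder is what the later steps call the NiFi directory.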

Step 2: Configuration

NiFi exposes many configuration options, all of which are set in the conf/nifi.properties file inside the NiFi directory.

For now, I am only changing the nifi.ui.banner.text property, which sets the banner text shown in the web UI.
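The property can be edited by hand in any text editor. If you prefer to script the change, something like the sketch below should work. set_nifi_property is a hypothetical helper name, and the banner value "Dev cluster" is only an example. It assumes the key already exists in the file and that the value contains no | or & characters, since those are special to sed here.

```shell
#!/usr/bin/env bash
# Sketch: change one key=value line in a properties file.
# set_nifi_property is a hypothetical helper, not part of NiFi.
set_nifi_property() {
  local file="$1" key="$2" value="$3"
  # Replace the whole "key=..." line in place.
  # -i.bak keeps the original file as <file>.bak before editing.
  sed -i.bak "s|^${key}=.*|${key}=${value}|" "$file"
}

# Example call, run from the NiFi directory (banner text is an assumption):
# set_nifi_property conf/nifi.properties nifi.ui.banner.text "Dev cluster"
```

NiFi reads nifi.properties at startup, so restart it if it is already running.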

Step 3: Starting Apache NiFi

In a terminal window, navigate to the NiFi directory and run one of the following commands:

- bin/nifi.sh run - Launches the application in the foreground; exit by pressing Ctrl-C.

- bin/nifi.sh start - Launches the application in the background.

- bin/nifi.sh status - Checks the application status.

- bin/nifi.sh stop - Shuts down the application.
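If you run these commands often, a small wrapper saves you from changing into the NiFi directory every time. This is only a sketch: nifi_ctl is a hypothetical function name, and the install path in the example calls is an assumption.

```shell
#!/usr/bin/env bash
# Sketch: run one of the four nifi.sh actions from any directory.
# nifi_ctl is a hypothetical helper, not part of NiFi.
nifi_ctl() {
  local home="$1" action="$2"
  case "$action" in
    run|start|status|stop) ;;   # the four actions listed above
    *) echo "unknown action: $action" >&2; return 2 ;;
  esac
  "$home/bin/nifi.sh" "$action"
}

# Example calls (the install path is an assumption):
# nifi_ctl "$HOME/nifi/nifi-1.6.0" start
# nifi_ctl "$HOME/nifi/nifi-1.6.0" status
```

The wrapper only forwards the action to bin/nifi.sh, so the output is whatever nifi.sh itself prints.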

i)bin/nifi.sh run:

ii) bin/nifi.sh start:

iii) bin/nifi.sh status:

iv)bin/nifi.sh stop:

Step 4: Apache NiFi Web User Interface

Once NiFi has started, its web user interface (UI) is available for creating and monitoring dataflows.

To use it, open a web browser and navigate to http://localhost:8080/nifi
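NiFi can take a little while to come up after the start command, so the page may not load right away. A rough way to wait for it from the terminal is sketched below. wait_for_ui is a hypothetical helper name, the retry count and delay are arbitrary defaults, and it assumes curl is installed.

```shell
#!/usr/bin/env bash
# Sketch: poll a URL until it answers or the attempts run out.
# wait_for_ui is a hypothetical helper, not part of NiFi.
wait_for_ui() {
  local url="$1" attempts="${2:-30}" delay="${3:-5}" i=1
  while [ "$i" -le "$attempts" ]; do
    # -s silent, -f treat HTTP errors (>= 400) as failure, -o discard the body.
    if curl -sf --max-time 5 -o /dev/null "$url"; then
      echo "NiFi UI is reachable at $url"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "NiFi UI did not respond at $url" >&2
  return 1
}

# Example call, using the URL from this step:
# wait_for_ui http://localhost:8080/nifi
```

If the function gives up, bin/nifi.sh status is a reasonable first check.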